This is the cerebellum. Its name means “little brain” — it’s a whole other brain under your “big” one.

If you vaguely remember something about what the cerebellum does, you’re probably thinking of something to do with balance. Medical students have to learn the “cerebellar gait” that results from cerebellar injury (it’s the same staggering gait that drunk people have, because alcohol impairs the cerebellum).

A more detailed neurological exam of a patient with cerebellar disease shows a wider variety of motor problems.

Here you’ll notice that the patient can’t bring his finger to his nose or clap his hands without a wobbling back-and-forth motion; that his eyes “wobble” back and forth (which is called nystagmus); and that he wobbles backwards and forwards while standing or walking, so that he nearly falls over and needs a broad-based gait to support himself.

In cerebellar disease, muscle tone is diminished (people are “floppy”), movements are not fluent (each individual sub-movement is separate), there’s dysmetria (failure to “aim” or estimate the distance to move, overshoot or undershoot) and there’s “intention tremor” (high amplitude, relatively slow wobbles that arise when the patient starts to move, as contrasted with the “resting tremor” characteristic of e.g. Parkinson’s disease.)

Clearly, the cerebellum does something to control movement, and movement is impaired when it is damaged. But why do we need a whole other “little brain” to control these aspects of movement?

There are already regions of the cerebrum (or “forebrain”) dedicated to movement, like the motor cortex and the basal ganglia. And you can do a lot of movement using just those!

Even in the rare cases known as cerebellar agenesis, where a person is born totally lacking a cerebellum[1][2][3], movement is still possible, just impaired: slow motor and speech development in childhood, abnormal spoken pronunciation, wobbly limb movements, and mild-to-moderate intellectual disability. But not paralysis, and not even particularly bad disability overall; a lot of these people were able to live independently and work at jobs.

So…that’s weird.

What is the cerebellum’s job? It seems weird to have a whole separate organ for “make motor and cognitive skills work somewhat better.”

The other weird thing about the cerebellum is anatomical.

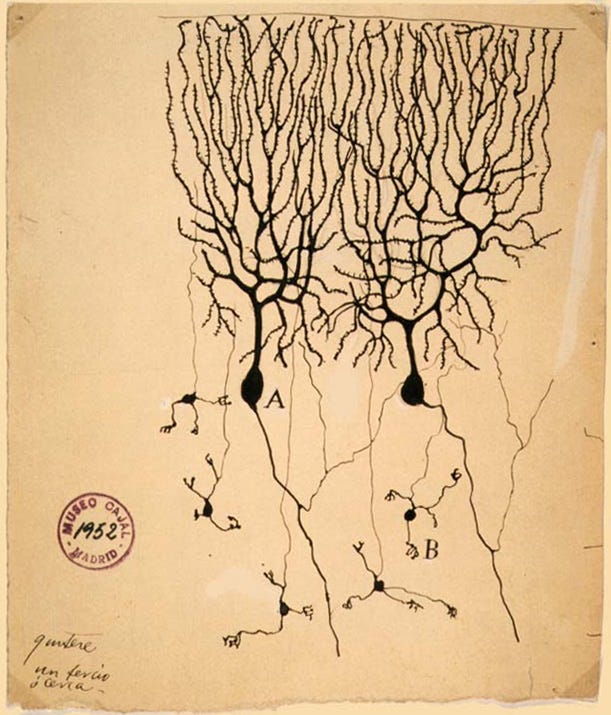

These very large, complex neurons are the Purkinje cells, which exist only in the cerebellum.

Each one receives on the order of a hundred thousand synapses, far more than the thousands typical of neurons in the cerebral cortex.

Most of the other cells in the cerebellum are the small granule cells. In fact, they are so numerous that they make up more than half of all neurons in the whole human brain. In total, the cerebellum contains 80% of all the brain’s neurons![4]

If you were an alien with a microscope who knew nothing about neurology, your first assumption would be “ah yes, the thinking happens in the cerebellum.”

Why is there so much neuronal complexity dedicated to... making movement a bit smoother and “higher” cognition a bit better?

The third weird fact is that the size of the cerebellum has been growing throughout primate evolution and human prehistory, faster than overall brain size.[5]

Great ape brains are distinguished from monkey brains by their larger frontal and cerebellar lobes. The Neanderthals had bigger brains than us but smaller cerebella. And, most strikingly, modern humans have much bigger cerebella than “anatomically modern” Cro-Magnon humans of only 50,000 years ago (but relatively smaller cerebral hemispheres!)

An alien paleontologist could be forgiven for assuming “ah yes, the cerebellum, the seat of the higher intellect.”

The cerebellum looks like it should have some crucial unique function. Something key to “what makes us human.” But what could it be?

The Cerebellum Is The Seat of Classical Conditioning

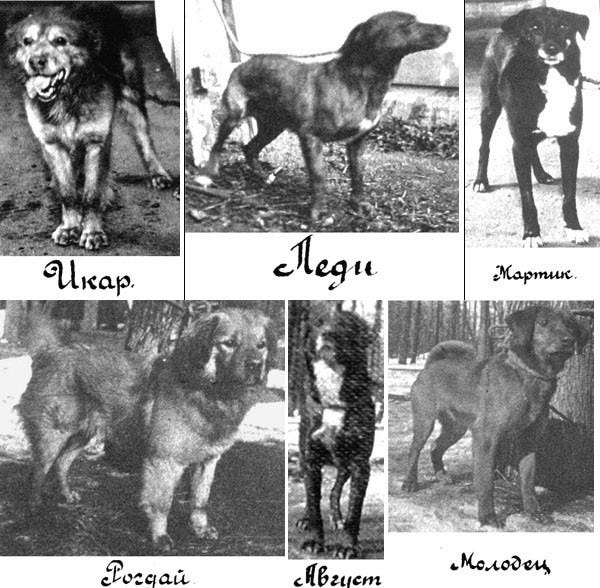

Classical conditioning — the thing that makes a dog salivate when it hears a bell it’s learned to associate with food — is a very low-level process.

You don’t need a lot of brain for classical conditioning.

You can classically condition the sea slug Aplysia, which has only 20,000 neurons, to flinch from a neutral sensation it’s learned to associate with a painful one.[6]

Even single cells can exhibit learning. The giant slime mold amoeba Physarum can be “trained” to cross a noxious part of a petri dish to reach food on the other side.[7]

However, in vertebrates, classical conditioning is highly localized in the nervous system: the cerebellum is necessary and sufficient for learning conditioned responses.[8]

A standard test of classical conditioning is the eyeblink test: can the subject learn to associate a neutral stimulus with an unpleasant one (like a puff of air to the eye) and automatically blink in response to the neutral stimulus.

If you lesion the cerebellum in animals, they no longer exhibit eyeblink conditioning. Humans with cerebellar damage also have no eyeblink conditioning.[9]

Conversely, if you want classical conditioning, all you need is a cerebellum; in fact, all you need for classical conditioning is the Purkinje cells![10]

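The eyeblink response is governed by the Purkinje cells. When an animal feels a puff of air to the eye, the Purkinje cells stop firing temporarily. Purkinje cells are inhibitory, so a pause in their firing releases their targets in the deep cerebellar nuclei; in a trained animal, that release is what triggers the conditioned blink.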

So, in 2007, some Swedish researchers decided to study this response in isolation. Here’s how it works.

You take a “decerebrate” ferret, i.e. a ferret whose cerebrum has been severed from the rest of the brain (the brainstem and cerebellum). You condition individual Purkinje cells by electrically stimulating their two input pathways: the climbing fibers (the unconditioned stimulus) and the mossy fibers (the conditioned stimulus), which reach the Purkinje cells indirectly, via the granule cells and their parallel fibers. Stimulating the climbing fibers (the same thing that happens naturally when a puff of air hits the eye) causes a temporary suppression of Purkinje cell firing; stimulating the mossy fibers does not. But if the mossy fibers are repeatedly stimulated right before the climbing fibers, the Purkinje cells learn the association and start suppressing their firing in response to the mossy fiber stimulation alone. This is a textbook example of classical conditioning.
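The logic of this experiment can be caricatured with the textbook delta rule used in Rescorla-Wagner-style models of conditioning. This is a hypothetical sketch, not the mechanism Jirenhed and colleagues identified: a single number, `strength`, stands in for whatever inside the Purkinje cell encodes the learned pause, and `learning_rate` and `max_pause` are made-up parameters.

```python
def simulate_conditioning(n_trials, paired=True, initial=0.0,
                          learning_rate=0.2, max_pause=1.0):
    """Hypothetical toy model of a conditioned Purkinje-cell pause.

    `strength` stands in for whatever makes the conditioned stimulus
    (CS, mossy-fiber input) evoke a pause. On paired trials the
    unconditioned stimulus (US, climbing-fiber input) follows the CS and
    pulls the strength toward `max_pause`; on CS-alone trials it decays
    toward zero. Returns the strength after each trial.
    """
    strength = initial
    history = []
    for _ in range(n_trials):
        target = max_pause if paired else 0.0
        # Delta rule: the change is proportional to the prediction error.
        error = target - strength
        strength += learning_rate * error
        history.append(strength)
    return history


acquisition = simulate_conditioning(20, paired=True)
extinction = simulate_conditioning(20, paired=False, initial=acquisition[-1])
print(acquisition[-1], extinction[-1])
```

Paired trials drive the conditioned pause up toward its maximum; CS-alone trials extinguish it again, loosely mirroring the acquisition and extinction phases named in the 2007 study’s title.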

Are cerebellar Purkinje cells the only individual neurons capable of single-cell learning?

Not in all animals; the sensory neurons of the sea slug Aplysia can be classically conditioned.[11] But I haven’t been able to find a study in vertebrates of single-cell classical conditioning or associative learning outside the cerebellum. (Other regions of the brain, of course, are involved in learning, but the learning might be occurring through multicellular information like the relationships between nearby neurons’ firing patterns.)

Why do we care?

First of all, this gives us at least one “unique job” for the cerebellum: it is the place in the brain where conditioned associations are learned.

Second of all, it disproves the long-term potentiation theory that learning in the brain happens exclusively through strengthening synaptic connections between neurons. (The old dictum that “neurons that fire together, wire together.”) The brain is not like a neural network where the only thing that is “learned” or “updated” is the weights between neurons. At least some learning evidently happens within individual neurons.

That’s bad news for anyone hoping to simulate a brain digitally. It means there’s a lot more relevant stuff to simulate (like the learning that goes on within cells) than the connectionist paradigm of treating each biological neuron like a neural-net “neuron” would imply, and thus the computational requirements of simulating a brain are higher — maybe vastly higher — than connectionists hope.

On the other hand, intracellular learning is great news for neuroscientists trying to discover exactly how learning works inside a brain. If you’re studying an example of learning (or classical conditioning) that occurs within a single neuron, then the long-hypothesized “engram”, or physical object corresponding to a piece of newly learned information, has to reside inside that neuron. Something needs to physically or chemically change in that neuron, representing the newly learned information, and causing the corresponding change in the neuron’s firing behavior.

Cerebellar Neurons Learn Quantities

Not only can individual Purkinje cells in the cerebellum be classically conditioned, they can also learn information about the timing of stimuli.

If the time interval between the conditioned stimulus and unconditioned stimulus is varied, the Purkinje cells learn to suppress their firing at different times to match.[12]

In other words, if the Purkinje cell has “learned” from experience that an aversive stimulus will typically follow the neutral stimulus in exactly 50 milliseconds, then the Purkinje cell will delay roughly that long after receiving the neutral stimulus before pausing its firing. If the Purkinje cell “learns” a longer delay, it’ll wait longer before the pause.
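A minimal sketch of what “learning a quantity” could mean, assuming (purely for illustration) that the cell keeps a running average of the interval between the neutral and aversive stimuli. The function names and the moving-average rule are hypothetical; nothing here is claimed to be how Purkinje cells actually store time.

```python
def learn_interval(observed_intervals_ms, learning_rate=0.3, initial_estimate_ms=0.0):
    """Hypothetical toy timer: keep a running estimate of the CS-to-US interval.

    After each trial the stored delay moves a fraction of the way toward the
    interval just experienced (an exponential moving average).
    """
    estimate = initial_estimate_ms
    estimates = []
    for interval in observed_intervals_ms:
        estimate += learning_rate * (interval - estimate)
        estimates.append(estimate)
    return estimates


def pause_onset_ms(cs_onset_ms, learned_delay_ms):
    """When the toy cell pauses: the CS arrives, then it waits the learned delay."""
    return cs_onset_ms + learned_delay_ms


short = learn_interval([50.0] * 30)
longer = learn_interval([200.0] * 30, initial_estimate_ms=short[-1])
print(round(pause_onset_ms(0.0, short[-1])), round(pause_onset_ms(0.0, longer[-1])))  # prints: 50 200
```

Retraining with a longer interval shifts the learned pause later, which is the qualitative behavior described above.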

This means that something inside the Purkinje cell is capable of representing number or quantity.

Cerebellar Purkinje cells, in other words, can count. (Or measure.)

Is The Cerebellum The Seat Of Measurement?

The fact that individual cerebellar Purkinje neurons contain the ability to measure quantities is highly suggestive about the function of the cerebellum, given the symptoms of cerebellar disease.

Dysmetria, or failure to estimate the right distance and/or speed to move, is a typical symptom of damage to the cerebellum. If measurement (especially motor timing) is localized to the cerebellum, then dysmetria as a cerebellar deficit makes perfect sense.

Other symptoms of cerebellar damage are easy to understand as consequences of dysmetria. Intention tremor (those big back-and-forth wobbles when the patient tries to move) might simply be what happens when dysmetria causes the movement to initially overshoot, and then an attempt to correct the overshoot itself overshoots and swings in the other direction, and so on. Nystagmus could be the same thing, with the muscles of the eye.
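The “overshoot, then over-correct the overshoot” account can be sketched with a one-line update rule. This is a hypothetical caricature that models dysmetria as a miscalibrated gain: each corrective movement covers `gain` times the remaining distance, so a gain above 1 always overshoots.

```python
def corrective_moves(target, gain, n_steps=8, start=0.0):
    """Hypothetical model of repeated corrective movements toward `target`.

    Each step moves `gain` times the remaining error. gain == 1 is a perfectly
    calibrated movement; gain > 1 models dysmetria as systematic overshoot.
    Returns the position after each step, starting with `start`.
    """
    position = start
    trajectory = [position]
    for _ in range(n_steps):
        error = target - position
        position += gain * error
        trajectory.append(position)
    return trajectory


print(corrective_moves(10.0, 1.0))
print(corrective_moves(10.0, 1.8))
```

With `gain=1.0` the first movement lands on target. With a gain between 1 and 2 the position swings back and forth across the target with shrinking amplitude, a tremor-like pattern; above 2, each correction overshoots by more than the last and the oscillation grows.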

Even the non-motor symptoms of cerebellar damage, like poor performance on cognitive tests, have sometimes been referred to as a kind of “dysmetria of thought.”[13]

Cerebellar patients have trouble with planning tasks (like the Tower of Hanoi puzzle), spatial reasoning tasks, and executive function tasks; you might suppose that mentally “gauging” how long to spend doing things, or “relating” subtasks to the whole, as well as understanding how objects fit together in space, are aspects of mental “measurement”.

Cerebellar patients have normal abilities to make grammatical sentences, but make strange errors in generating logical sentences.[14] Things like:

“It’s a big job, but it’s not easy.”

“If you drive, it will be less crowded”

“Although it’s the wrong size for you, I’ll ask to have one that will fit you.”

“I never thought I would meet you here; Nor did I, because everything seems so fresh here to buy.”

Conjunctions (like “but”, “although”, “because”, “if”) represent particular logical relationships between parts of sentences. The cerebellar patients’ errors imply a specific impairment in the ability to make those conjunctional relationships.

A sentence that begins “it’s a big job, but” ought to end with something that would ordinarily seem to conflict with the claim “it’s a big job”; the cerebellar patient instead ended the sentence with something that typically reinforces the claim (“big jobs” are usually “not easy”). This error violates the expected logical relationship between clauses.

In a sense this is analogous to a problem in “spatial” or “part-whole” relating — the cerebellar patient has trouble constructing sentences whose clauses relate in the right way to match the conjunction between them. It’s analogous to the way cerebellar patients have the basic sensorimotor ability to do all the same individual movements that healthy people do, but they have trouble sequencing them fluently, “putting the pieces together” in the right way.

Of course, other areas of the brain are involved in “measurement” or “quantity” activities too. Visual areas of the brain “measure” spatial distances, auditory areas “measure” pitch and rhythm, various parts of the cerebral cortex are active in mental arithmetic, etc. So it’s not that the cerebellum is the sole region that “measures” or “relates” things. But there is something measurement-related going on.

Is The Cerebellum The Home of Anticipation?

Another broad theory of what the cerebellum is “for” is anticipation or preparation.[15]

This fits well with the fact that classical conditioning happens in the cerebellum. Classical conditioning consists of learning to expect one stimulus to follow another, and respond in anticipation of the expected stimulus.

It also fits well with the cerebellum’s role in motor planning and sequencing. Fluent movement requires unconsciously, rapidly forming intentions to do multiple different things in sequence without having to stop to think between steps.

Gordon Holmes (1939) quotes a cerebellar-lesioned patient as saying, "The movements of my left (unaffected) arm are done subconsciously, but I have to think out each movement of the right (affected) arm. I come to a dead stop in turning and have to think before I start again."

This kind of cerebellar damage symptom represents a failure of the anticipatory or preparatory function that automatically “gets ready” for the next step before the last one is complete.

Patients with cerebellar damage have impaired ability to switch their attentional focus (for instance, between a task that requires watching for visual cues and one that requires listening for auditory cues) but unimpaired ability to perform similar tasks that don’t require shifting focus. Patients with brain lesions outside the cerebellum didn’t have problems with task-switching.

This also suggests that the cerebellum is involved in the “readiness” or “anticipation” prior to making a mental action.

Also, experimentally stimulating the cerebellum in animals makes them more sensitive to subsequent sensory stimuli, further supporting the hypothesis that the cerebellum helps organisms “prepare to pay attention”.

The Purkinje neurons of the cerebellum, in particular, are involved in “preparatory” motor adjustments, such as altering one’s grip on an object in anticipation of the experimenter moving it.

If the function of the cerebellum is fully general “anticipatory” or “predictive” modeling, this would explain why it’s so important, especially in primate and hominid evolution. Dexterity (tool use, throwing) has obviously been selected for in our primate and hominid ancestors, and so have the general cognitive abilities to predict and make sense of a changing world.

It also would explain why people without a cerebellum are still capable of most of the same tasks as healthy people, just with worse performance.

People who lack a cerebellum are wholly incapable of eyeblink classical conditioning, but they’re not wholly incapable of learning or memory; they learn new skills slowly but they do learn them and they don’t have total amnesia.

This would make sense if they are unable to learn to instantly anticipate context to prepare for upcoming actions, but they are able to learn and form memories through a slower, noisier route using the cerebrum. They can perform most of the same skills as healthy people, but they have to adjust “by trial and error” instead of using anticipation to get things right the first time, so they’re slower and less accurate.

Another way of phrasing this is that the cerebellum is a forward model of the consequences of action.[16]

The cerebellum receives an efference copy of motor commands generated by the motor cortex, and uses its model to predict the sensory consequences; it can then compare predicted vs. actual data and error-correct. A fast, subconscious path for sensorimotor prediction and learning (including classical conditioning) is confined to the cerebellum alone; a slower path includes both the cerebellum and cerebrum.
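A forward model in this sense can be sketched as a predictor that takes the efference copy as input and is corrected by the mismatch between predicted and actual sensation. The linear “body”, its coefficients, and the least-mean-squares (LMS) update are all assumptions chosen for brevity; the cerebellum is not claimed to run this algorithm.

```python
import random


class ForwardModel:
    """Hypothetical linear forward model: efference copy in, predicted sensation out."""

    def __init__(self, learning_rate=0.05):
        self.weight = 0.0
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, efference_copy):
        return self.weight * efference_copy + self.bias

    def update(self, efference_copy, actual_sensation):
        # Compare the prediction with what the senses actually reported and
        # nudge the parameters to shrink the error (LMS / delta rule).
        error = actual_sensation - self.predict(efference_copy)
        self.weight += self.learning_rate * error * efference_copy
        self.bias += self.learning_rate * error
        return error


def body(motor_command, rng):
    """Stand-in for body plus world: assumed linear, with a little sensor noise."""
    return 2.0 * motor_command + 0.5 + rng.gauss(0.0, 0.01)


def train_forward_model(n_trials=2000, seed=0):
    rng = random.Random(seed)
    model = ForwardModel()
    for _ in range(n_trials):
        command = rng.uniform(-1.0, 1.0)             # a motor command is issued...
        model.update(command, body(command, rng))    # ...and its copy is checked against feedback
    return model


model = train_forward_model()
print(round(model.weight, 2), round(model.bias, 2))
```

After training, `model.predict(command)` estimates the sensory consequence of a command before any feedback arrives, which is the property a fast prediction path needs.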

It takes hundreds of milliseconds to consciously perceive sensory information — far too slow a timescale to allow finely tuned and responsive motion that adapts to sensory feedback. Motion in real time has to be shaped and controlled by something faster than sensory processing through the long chains of neurons used for e.g. image recognition in the cortex — but moving totally “blind” without feedback control from anything would result in unacceptably crude, sloppy, choppy movement. The solution, perhaps, is the “virtual reality” generated by the cerebellum, a predictive model of the world that runs faster than the senses.
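The payoff of prediction under sensory delay can be illustrated with a toy control loop. Control engineers handle delayed feedback with a similar trick, the Smith predictor; the sketch below borrows that idea and assumes a perfect forward model of a trivially simple limb (position changes by exactly the command each step). The delay, gain, and step count are arbitrary choices.

```python
def run_controller(use_forward_model, target=10.0, gain=0.2, delay=5, n_steps=80):
    """Move a hypothetical 1-D 'limb' toward `target` when feedback is `delay` steps late.

    Assumed plant: position[t + 1] = position[t] + command[t].
    Without a forward model, the controller acts on the stale observation.
    With one, it adds the commands sent since that observation (its
    efference copies) to estimate where the limb is now.
    """
    positions = [0.0]
    commands = []
    for t in range(n_steps):
        seen = max(0, t - delay)          # newest observation that has arrived
        estimate = positions[seen]
        if use_forward_model:
            # Predict the present from the stale observation plus in-flight commands.
            estimate += sum(commands[seen:t])
        command = gain * (target - estimate)
        commands.append(command)
        positions.append(positions[-1] + command)
    return positions


naive = run_controller(use_forward_model=False)
predictive = run_controller(use_forward_model=True)
print(max(naive), max(predictive), predictive[-1])
```

Acting on stale feedback, the naive controller keeps pushing after the limb has already arrived and overshoots; adding the efference copies of commands still “in flight” lets the predictive controller act on where the limb is now, and it settles on the target without overshooting.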

The Cerebellum In Other Animals: Active Sensing?

All jawed vertebrates have a cerebellum[17]; jawless fish such as hagfish and lampreys have, at most, a rudimentary one.

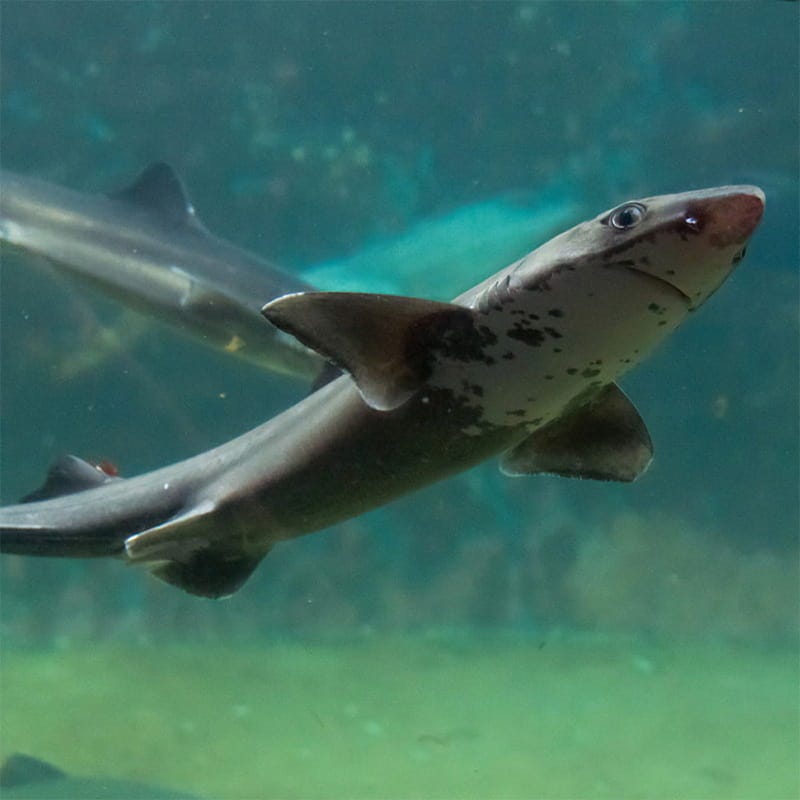

If you remove the cerebellum from a dogfish, it can still swim, but it has a tendency to “stall” and difficulty judging its turns, so that it often bumps into the sides of the tank.

The cerebellum is especially enlarged in fish with electrolocation abilities, like the Peters’s elephant-nose fish Gnathonemus petersii, an African freshwater fish that uses electricity-sensing receptors all over its body to navigate around obstacles.

This fish uses its huge cerebellum to monitor the electrical signatures of moving objects in the water around it. Particular Purkinje cells in the elephant-nose fish cerebellum are sensitive to particular target distances and speeds, just as particular neurons in the mammalian visual cortex are sensitive to the shape, location, and angle of visual features.

This unusual electrosensing function reinforces that the cerebellum is not just a motor control organ, but has a more general function related to spatial and environmental awareness.

Monotremes such as the platypus also have an unusually large cerebellum; like the elephant-nose fish, the platypus is electrosensitive, with electroreceptors in its bill that it uses to detect swimming prey in the water.

Aquatic mammals (whales, dolphins, seals, and sea lions) are also notable for their enlarged cerebellums, especially the baleen whales. In echolocating marine mammals, such as dolphins and other toothed whales, the cerebellum is thought to be involved in echolocation. Echolocating bats also use the cerebellum for navigation, though their overall cerebellum size relative to brain size is small.

In many mammals, large areas of the cerebellum are devoted to processing sensory and motor information for parts of the body that are particularly dexterous and used in exploration: the snout in rodents, the hands in primates, the tip of the tail in arboreal monkeys.

Across animals, the cerebellum seems to be involved in both motion and sensory perception, and intriguingly, seems to be particularly enlarged in animals that use echolocation or electrosensing in the water, for spatial awareness of object locations in all directions.

This is suggestive of something like “spatial world modeling” going on in the cerebellum, and is consistent with the theory that the cerebellum’s job is anticipation and preparation.

Echolocation and electrosensing are both sensory modalities that involve an organism generating a “field” around itself (of electric or sound waves) and perceiving objects in the patterns of disruption in that field. Unlike vision, hearing, and smell, they are “active sensory systems”, in which the organism can control the intensity, direction, and timing of the “probe” signal (the sound or electric signal they emit).
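The “timing” half of active sensing comes down to simple arithmetic: because the animal knows when it emitted the probe, the delay until the echo returns gives the distance to the object. A sketch for echolocation, using approximate textbook speeds of sound (about 343 m/s in air at room temperature and about 1500 m/s in seawater):

```python
# Approximate speeds of sound; real values vary with temperature, salinity, and depth.
SPEED_OF_SOUND_M_PER_S = {"air": 343.0, "seawater": 1500.0}


def echo_range_m(round_trip_s, medium="seawater"):
    """Distance to a reflecting object from the echo's round-trip time.

    The sound travels out and back, so the one-way distance is half the
    total path length.
    """
    return SPEED_OF_SOUND_M_PER_S[medium] * round_trip_s / 2.0


print(echo_range_m(0.02), echo_range_m(0.02, "air"))
```

An echo returning 20 ms after a click in seawater puts the object about 15 m away; the same delay in air corresponds to about 3.4 m.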

Touch can have an analogous “actively controllable” quality, as the animal reaches out a snout, hand, or tail to explore its nearby surroundings. But truly active sensory systems, unlike touch, allow an animal to explore and probe at a distance, and gain a 3D model of its whole surrounding world.

While humans don’t have these kinds of sensory systems, they may provide some intuition for what our cerebellum is doing: perhaps building implicit anticipations of where everything in the physical world around us is, and how it will respond if “poked”.

The Structure of the Cerebellum

If you try to map regions of the cerebellum by function and by functional connectivity[18], you get a close one-to-one match with the cerebrum, even down to the localization of language in one hemisphere (the left hemisphere of the cerebrum and the right hemisphere of the cerebellum; everything’s flipped).[19]
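Functional connectivity, as defined in the notes, is at its core the correlation of two regions’ activity over time. A minimal sketch of that statistic (Pearson correlation) on made-up activity traces; real fMRI analyses add substantial preprocessing that is omitted here.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length activity time series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


cortex_trace = [1.0, 3.0, 2.0, 5.0, 4.0, 6.0]        # hypothetical signal
cerebellum_trace = [2.1, 6.2, 3.9, 10.1, 8.0, 12.2]  # hypothetical signal
print(round(pearson(cortex_trace, cerebellum_trace), 3))
```

A value near +1 means the two regions rise and fall together; computing this for every pair of regions produces a connectivity map.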

The only parts of the cerebral cortex without a corresponding cerebellar region are the primary auditory and visual cortices. (Suggestive, given that hearing and vision are passive senses, and my hypothesis in the previous section that the cerebellum has something to do with active sensing.)

The cerebellum has a repeated, almost crystal-like neural structure: it’s divided into multiple identical parallel modules.[20]

The Purkinje cells (PC, in orange) project down into the core of the cerebellum, where they connect to the deep nuclei. Climbing fibers (red) feed back up to the Purkinje cells. Mossy fibers (yellow) also feed into the Purkinje cells indirectly, via the granule cells (beige). The Golgi, stellate, and basket cells (blue) are inhibitory: stellate and basket cells inhibit the Purkinje cells, while Golgi cells inhibit the granule cells. The red, yellow, and beige ones are excitatory.

Basically, this is a feedforward chain (excitatory all the way up to the Purkinje cells, whose own output is inhibitory), plus inhibitory feedback loops. The main chain goes:

Afferent neurons (receiving input from the rest of the brain) →

mossy fibers →

granule cells →

parallel fibers →

Purkinje cells →

output to the rest of the brain

but then there are lots of additional loops where this pathway can be self-inhibiting.

The primary feedforward chain, though, is a reasonable candidate for the mechanism behind the super-fast “forward model” that generates predictions to inform action faster than sensory processing can generate conscious perceptions.
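One classic way to think about this chain, the Marr-Albus theory (not discussed in the post, so treat the mapping as an assumption), is as a fixed random expansion into many granule cells followed by a Purkinje readout trained by a climbing-fiber error signal. The sketch below uses that idea to learn XOR, which no linear readout of the raw inputs can represent. It learns purely by adjusting weights, the connectionist picture the post argues is incomplete, so read it only as an illustration of the feedforward architecture. All sizes, names, and the normalized delta rule are hypothetical choices.

```python
import random


def make_granule_layer(n_inputs, n_granule, rng):
    """Fixed random expansion: each hypothetical granule cell mixes the mossy inputs."""
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_inputs)] for _ in range(n_granule)]
    biases = [rng.gauss(0.0, 1.0) for _ in range(n_granule)]

    def granule(mossy):
        # Rectified weighted sum: a cell stays silent unless its net input is positive.
        return [max(0.0, sum(w * m for w, m in zip(row, mossy)) + b)
                for row, b in zip(weights, biases)]

    return granule


def train_purkinje(encode, patterns, targets, epochs=2000, mu=0.5):
    """Train a linear readout with a normalized delta rule.

    The error term stands in for a climbing-fiber "teaching" signal (assumption).
    """
    features = [encode(x) for x in patterns]   # the expansion is fixed, so cache it
    w = [0.0] * len(features[0])
    for _ in range(epochs):
        for h, target in zip(features, targets):
            error = target - sum(wi * hi for wi, hi in zip(w, h))
            norm = sum(hi * hi for hi in h) or 1.0
            w = [wi + mu * error * hi / norm for wi, hi in zip(w, h)]
    return lambda x: sum(wi * hi for wi, hi in zip(w, encode(x)))


PATTERNS = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
TARGETS = [0.0, 1.0, 1.0, 0.0]   # XOR: not a linear function of the raw inputs


def worst_error(readout):
    return max(abs(readout(x) - t) for x, t in zip(PATTERNS, TARGETS))


granule = make_granule_layer(n_inputs=2, n_granule=100, rng=random.Random(0))
expanded = train_purkinje(granule, PATTERNS, TARGETS)
raw = train_purkinje(lambda x: [x[0], x[1], 1.0], PATTERNS, TARGETS)
print(worst_error(expanded), worst_error(raw))
```

The expanded readout fits all four patterns closely, while the raw readout cannot do better than an error of 0.5 on some pattern. At prediction time the whole thing is a single pass with no recurrent loops, the “one and done” property that makes it fast.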

On a slightly larger scale, nearby patches of neurons in the cerebellum form pretty much self-contained modules without much connection to cells in other modules.

This is in contrast to the cerebral cortex, which varies a lot in cell composition between regions, has lots of recurrent loops, and lots of cross-connections between neurons from different local columns. The cerebellum is “one and done” — information goes in, through the Purkinje cells, and out.

There are lots of different such modules, looping the cerebellum together with different parts of the cerebrum.

Loops through the parietal lobes are involved in visual-motor coordination (like reaching the hand out to grasp something.)

Loops through the oculomotor cortex are involved in controlling eye movements.

Loops through the prefrontal cortex are involved in control of attention and working memory, fear extinction learning[21], mental preparation of imminent actions, and procedural learning.

There are other loops (including through the basal ganglia and limbic system) but these have less well understood functions.

The independence of cerebellar modules makes sense given the need for speed — you can’t have long chains of interconnected neurons messing around if you want to give near-real-time model responses to control immediate action.

Rethinking Intelligence

People often talk as though “higher” intelligence lives in the cortex, especially the frontal lobes. The “hindbrain” is for boring, animal stuff, like controlling heart rate and hormones. Real thinking happens behind your noble brow.

This picture, it turns out, is wrong.

Modern Homo sapiens is as much characterized by our big cerebellums as by our big frontal lobes.

Even the most iconically “thought-like” thinking — the phonological loop, i.e. imagined or inner speech — passes through both the cerebrum and cerebellum. (Speech, after all, is motion, and imagined speech is simulated motion. I can literally feel subtle movements and tension in my tongue and jaw when I think.)

Most things we do have a cerebellar component, what some neuroscientists call a cerebellar transform[22], smoothing and tuning and relating and fluently switching between the basic building-block abilities (sensory perception, motion, comprehension) that reside in the cerebrum.

The cerebellum regulates rate, rhythm, speed, contextual appropriateness; damage it, and the same building block actions are still possible, but all out of whack, clumsy and disproportionate.

This is consistent with the “embodied cognition” worldview where sensorimotor functions are on a continuum with or of the same kind as cognitive functions; thought is just “inner” motion and/or “inner” sensation. (And even abstract thought is built up of motions and sensations, via analogy or metaphor.)

The cerebellum may also offer some inspiration for artificial-intelligence approaches, especially for robotics or other control problems: it may be beneficial to include a fast, feedforward-only predictive modeling step to control real-time actions, alongside a slower training/updating pathway for retraining the model. (I may formalize this more in a later post.)
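One possible reading of that suggestion, which is an assumption since the details are left for later: a predictor whose weights stay frozen while it serves real-time requests, plus a separate learner that trains on observed outcomes and periodically publishes its weights to the fast path. The class and its parameters are hypothetical.

```python
import random


class TwoSpeedPredictor:
    """Hypothetical sketch: a frozen fast predictor plus a slow background learner."""

    def __init__(self, n_features, learning_rate=0.05, sync_every=100):
        self.fast_weights = [0.0] * n_features   # read on every control tick, never trained in place
        self.slow_weights = [0.0] * n_features   # trained from observed outcomes
        self.learning_rate = learning_rate
        self.sync_every = sync_every
        self.steps = 0

    def predict(self, features):
        # Fast path: one dot product, no learning, suitable for real-time use.
        return sum(w * f for w, f in zip(self.fast_weights, features))

    def observe(self, features, outcome):
        # Slow path: delta-rule update on a separate copy of the weights.
        guess = sum(w * f for w, f in zip(self.slow_weights, features))
        error = outcome - guess
        self.slow_weights = [w + self.learning_rate * error * f
                             for w, f in zip(self.slow_weights, features)]
        self.steps += 1
        if self.steps % self.sync_every == 0:
            # Periodically publish the retrained model to the fast path.
            self.fast_weights = list(self.slow_weights)


rng = random.Random(1)
predictor = TwoSpeedPredictor(n_features=2)
for _ in range(2000):
    x = rng.uniform(-1.0, 1.0)
    predictor.observe([x, 1.0], 3.0 * x + 1.0)   # hypothetical environment: y = 3x + 1
print(predictor.predict([0.5, 1.0]))
```

Keeping the two copies separate means the real-time path never waits on training, at the cost of acting on a slightly stale model between syncs.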

[1] Timmann, D., et al. "Cerebellar agenesis: clinical, neuropsychological and MR findings." Neurocase 9.5 (2003): 402-413.

[2] Yu, Feng, et al. "A new case of complete primary cerebellar agenesis: clinical and imaging findings in a living patient." Brain 138.6 (2015): e353-e353.

[3] Ronconi, Luca, et al. "When one is enough: impaired multisensory integration in cerebellar agenesis." Cerebral Cortex 27.3 (2017): 2041-2051.

[4] Haldipur, Parthiv, Kathleen J. Millen, and Kimberly A. Aldinger. "Human cerebellar development and transcriptomics: implications for neurodevelopmental disorders." Annual Review of Neuroscience 45 (2022): 515-531.

[5] Weaver, Anne H. "Reciprocal evolution of the cerebellum and neocortex in fossil humans." Proceedings of the National Academy of Sciences 102.10 (2005): 3576-3580.

[6] Hawkins, Robert D., et al. "Classical conditioning of the Aplysia siphon-withdrawal reflex exhibits response specificity." Proceedings of the National Academy of Sciences 86.19 (1989): 7620-7624.

[7] Tang, Sindy KY, and Wallace F. Marshall. "Cell learning." Current Biology 28.20 (2018): R1180-R1184.

[8] Thompson, Richard F. "Neural mechanisms of classical conditioning in mammals." Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences 329.1253 (1990): 161-170.

[9] Topka, Helge, et al. "Deficit in classical conditioning in patients with cerebellar degeneration." Brain 116.4 (1993): 961-969.

[10] Jirenhed, Dan-Anders, Fredrik Bengtsson, and Germund Hesslow. "Acquisition, extinction, and reacquisition of a cerebellar cortical memory trace." Journal of Neuroscience 27.10 (2007): 2493-2502.

[11] Walters, Edgar T., and John H. Byrne. "Associative conditioning of single sensory neurons suggests a cellular mechanism for learning." Science 219.4583 (1983): 405-408.

[12] Johansson, Fredrik, et al. "Memory trace and timing mechanism localized to cerebellar Purkinje cells." Proceedings of the National Academy of Sciences 111.41 (2014): 14930-14934.

[13] Schmahmann, Jeremy D. "Dysmetria of thought: clinical consequences of cerebellar dysfunction on cognition and affect." Trends in Cognitive Sciences 2.9 (1998): 362-371.

[14] Guell, Xavier, Franziska Hoche, and Jeremy D. Schmahmann. "Metalinguistic deficits in patients with cerebellar dysfunction: empirical support for the dysmetria of thought theory." The Cerebellum 14 (2015): 50-58.

[15] Courchesne, Eric, and Greg Allen. "Prediction and preparation, fundamental functions of the cerebellum." Learning & Memory (1997).

[16] D'Angelo, Egidio, and Stefano Casali. "Seeking a unified framework for cerebellar function and dysfunction: from circuit operations to cognition." Frontiers in Neural Circuits 6 (2013): 116.

[17] Paulin, Michael G. "The role of the cerebellum in motor control and perception." Brain, Behavior and Evolution 41.1 (1993): 39-50.

[18] Two regions of the brain are said to be “functionally connected” if brain activity in those regions is correlated, as measured by fMRI.

[19] Klein, A. P., et al. "Nonmotor functions of the cerebellum: an introduction." American Journal of Neuroradiology 37.6 (2016): 1005-1009.

[20] D'Angelo, Egidio, and Stefano Casali. "Seeking a unified framework for cerebellar function and dysfunction: from circuit operations to cognition." Frontiers in Neural Circuits 6 (2013): 116.

[21] i.e. learning to stop fearing associated stimuli after discovering the danger is gone.

[22] Guell, Xavier, John DE Gabrieli, and Jeremy D. Schmahmann. "Embodied cognition and the cerebellum: perspectives from the dysmetria of thought and the universal cerebellar transform theories." Cortex 100 (2018): 140-148.

Nice writeup! I’m gonna do the annoying thing where I self-promote my own cerebellum theory, and see how it compares to your discussion :) Feel free to ignore this.

My theory of the cerebellum is very simple. (Slightly more details here — https://www.lesswrong.com/posts/Y3bkJ59j4dciiLYyw/intro-to-brain-like-agi-safety-4-the-short-term-predictor#4_6__Short_term_predictor__example__1__The_cerebellum )

MY THEORY: The cerebellum has hundreds of thousands of input ports, with 1:1 correspondence to hundreds of thousands of corresponding output ports. Its goal is to emit a signal at each Output Port N a fraction of a second BEFORE it receives a signal at Input Port N. So the cerebellum is like a little time-travel box, able to reduce the latency of an arbitrary signal (by a fixed, fraction-of-a-second time-interval), at the expense of occasional errors. It works by the magic of supervised learning—it has a massive amount of information (context) about everything happening in the brain and body, and it searches for patterns in that context data that systematically indicate that the input is about to arrive in X milliseconds, and after learning such a pattern, it will fire the output accordingly.

Out of the hundreds of thousands of signals that enter the cerebellum time machine, some seem to be motor control signals destined for the periphery; reducing latency there is important because we need fast reactions to correct for motor errors before they spiral out of control. Others seem to be proprioceptive signals coming back from the periphery; reducing latency there is important for the same reason, and also because there’s a whole lot of latency in the first place (from signal propagation time). I’m a bit hazy on the others, but I think that some are "cognitive"—outputs related to attention-control, symbolic manipulation, and so on—and that reducing latency on those allows generally more complex thinking to happen in a given amount of time.

OK, now I’m going to go through your article and try to explain everything in terms of my theory. See how I do…

MOTOR SYMPTOMS: As above, without a cerebellum, you’re emitting motor commands but getting much slower feedback about their consequences, hence bad aim, overcorrection and so on.

ANATOMY: Briefly discussed at my link above, including links to the literature, but anyway, without getting into details, I claim that the configuration of Purkinje cells, climbing fibers, granule cells, etc. are plausibly compatible with my theory. It especially explains the remarkable uniformity of the cerebellar cortex, despite cerebellar involvement in many seemingly-different things (motor, cognition, emotions).

SIZE OF CEREBELLUM GROWING FASTER THAN OVERALL BRAIN SIZE IN HUMAN PREHISTORY: A big cerebellum presumably allows it to be a time-machine for more signals, or a better (less noisy) time-machine for the same number of signals, or both. Which is it? My low-confidence guess is "more signals"; I think it’s time-machining prefrontal cortex outputs (among others), and the number of such signals grew a lot in human prehistory, if memory serves. But it could be other things too.

CLASSICAL CONDITIONING: I don’t think your claim “the cerebellum is necessary and sufficient for learning conditioned responses” is true. I think it’s necessary and sufficient for eyeblink conditioning specifically, and some other things like that. For example, fear conditioning famously centers around the amygdala. I know you were quoting a source, but I think the source was poorly worded—I think it was specifically talking about eyeblink conditioning and "other discrete behavioural responses for example limb flexion", as opposed to ALL classical conditioning.

But anyway, for eyeblink conditioning, there seems to be a little specific brainstem circuit that goes (1) detect irritation on cornea, (2) use the cerebellum to time-travel backwards by a fraction of a second, (3) blink. Step 2 involves the cerebellum searching through all the context data (from all around the brain) for systematic hints that the cornea irritation is about to happen (a.k.a. supervised learning), and thus the cerebellum will notice the CS if there is one.

INDIVIDUAL PURKINJE CELLS CAN LEARN INFORMATION ABOUT THE TIMING OF STIMULI: If evolution is trying to make an organ that will reliably emit a signal 142 milliseconds before receiving a certain input signal, on the basis of a giant assortment of contextual information arriving at different times, then this kind of capability is obviously useful.

CEREBELLAR PATIENTS MAKE STRANGE ERRORS IN GENERATING LOGICAL SENTENCES: As above, I think there are cortex output channels that manipulate other parts of the cortex (via attention-control and such), and the cerebellum learns to speed up those signals like everything else, and this effectively allows for more complex thoughts, because there is only so much time for a "thought" to form (constrained by brain oscillations and other things), and the signals have to do whatever they do within that restricted time. I acknowledge that I’m being vague and unconvincing here.

ANTICIPATION: self-explanatory—this part is where you’re closest to my perspective, I think.

ELECTROLOCATION: I don’t know why electrolocation demands a particularly large cerebellum. Maybe latency is really problematic for some reason? Maybe the patterns are extremely complicated and thus require a bigger cerebellum to find them?

If you want some examples of the cerebellum in computational brain models, including modelling beyond just weights between neurons, the lab I did my master's in built some models https://github.com/ctn-waterloo/cogsci2020-cerebellum

The author of that paper would be happy to chat with you if you're interested, including how it features in a model of the motor control system https://royalsocietypublishing.org/doi/full/10.1098/rspb.2016.2134?rss=1